Sofya (Sofi) Dymchenko 🐭

Hi, salut, привет! I am a final-year Ph.D. student at University Grenoble Alpes and INRIA (France), where I work with Bruno Raffin in the Datamove team. I am a member of the Melissa project, which develops a framework for efficient large-scale deep surrogate training on HPC systems.

My thesis research question is:

“How to make the training of data-driven surrogates more data-efficient, i.e., how to obtain an accurate surrogate with fewer generated simulations?”

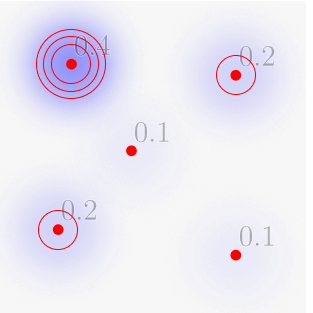

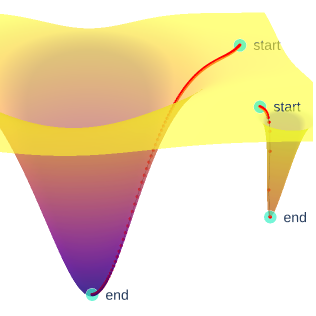

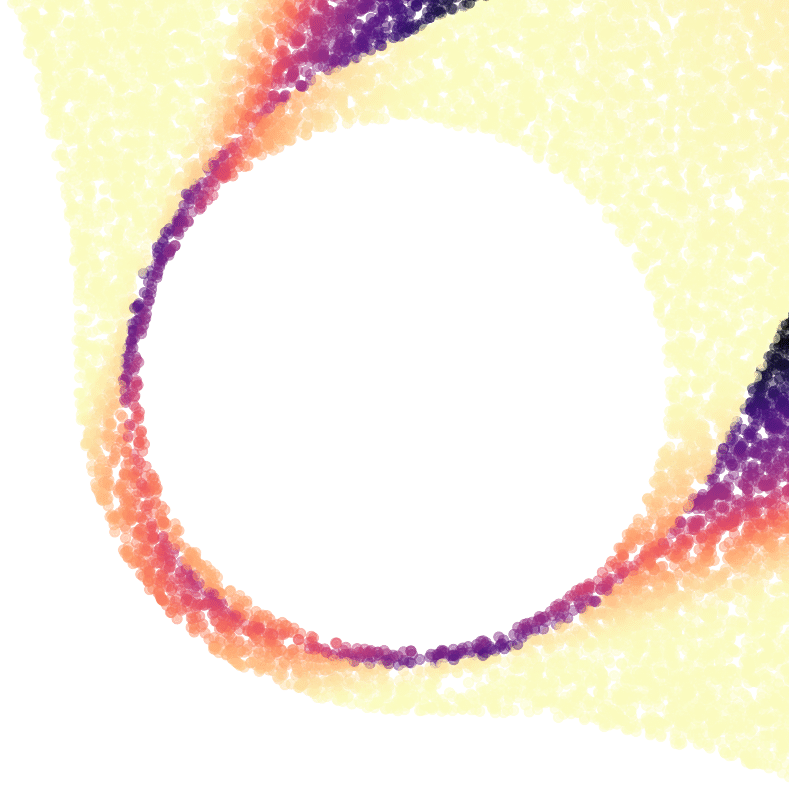

The cost of generating training data from physical solvers is a major bottleneck in scientific deep learning; in particular, it limits how far deep surrogate models can scale to complex real-world problems. Unlike in classical deep learning, with solvers we have the power to create data, but with such power comes the question: “Which data to generate?” The current standard practice is to create the data by uniformly sampling the input parameters of a solver. Is that really the best way? The goal of my thesis is to close this gap. I developed an active learning method that reduces the number of training simulations required by choosing more informative input parameters based on the surrogate training loss. For more details, see the publications listed below and/or the recording of my presentation at SC 2024 [youtube-link].

After defending my thesis (February-March 2026), I plan to continue working in the field of AI for Science. I want:

- to work side by side in a team of passionate researchers and engineers,

- to apply my skills and knowledge to solving real-world scientific cases,

- to stay connected to the scientific community by publishing and presenting the results, and

- to keep learning and developing myself in this fast-evolving field.

Do not hesitate to send me a message at sofya(dot)dymchenko(at)inria(dot)fr.

#openscience #inclusivescience #phdwithadhd

my publications

- Loss-driven sampling within hard-to-learn areas for simulation-based neural network training. In MLPS 2023 - Machine Learning and the Physical Sciences Workshop at NeurIPS 2023 - 37th conference on Neural Information Processing Systems, Dec 2023

- Poster: Loss-driven sampling for online neural network training with large scale simulations. Sep 2023